Project Information

- Category: Project

- Position: Research Assistant

- Project date: August 2022 - Present

- Publication: arXiv

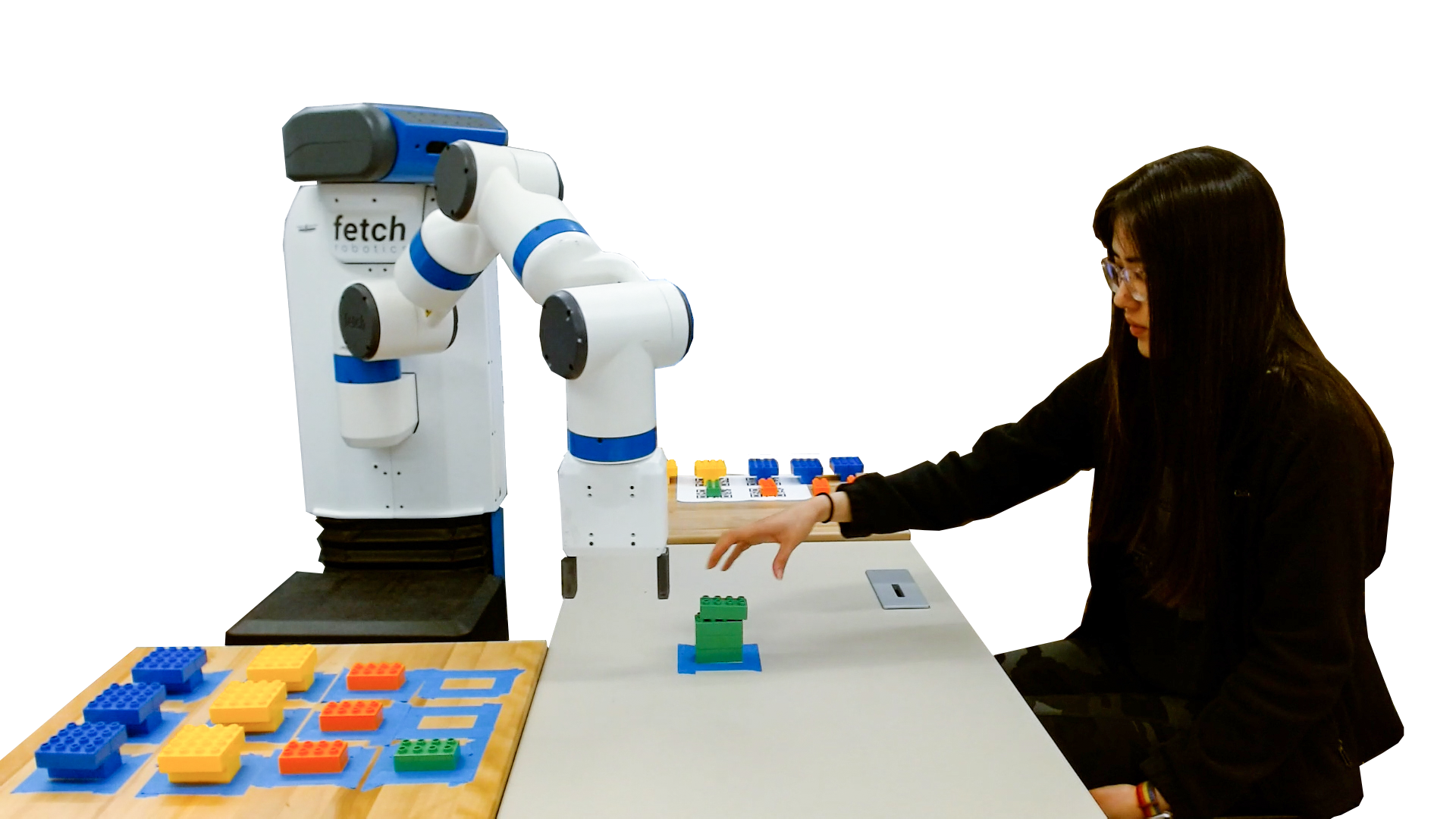

Collaborative Robotics Lab

Worked on the Fetch Robotic System, a research platform for human-robot interaction. The study focused on transparency: whether an autonomous system should always reveal its true capabilities. For this project, I was responsible for building an autonomous system that uses computer vision, motion planning, ROS, and a web server to create a seamless interaction between the robot and the user.

User Study

For the user study, we provided four different options for the user to choose from. The goal of the user study was for the user and the robot to collaborate. The user would select a move, and that would trigger an action from the robot. An important aspect of the system is that the robot selects its move using the same information as the user. Before the study begins, the user is told that the robot is one of two types: capable or confused. A capable robot can select all the blocks, while a confused robot can only select a subset of them. In the backend, the robot runs an algorithm that is either transparent or opaque. The transparent algorithm was rewarded more for picking moves that communicated the robot's type (confused or capable), while the opaque algorithm chose moves based solely on reward.
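The difference between the two backend policies can be sketched as a scoring rule. This is a minimal illustration, not the study's actual algorithm: the `Move` fields, the `expected_reward` values, and the additive `reveal_bonus` are all hypothetical. The opaque policy maximizes expected reward alone, while the transparent policy adds a bonus to moves that only a capable robot could make.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Move:
    name: str
    expected_reward: float   # hypothetical value, not the study's reward table
    confused_can_pick: bool  # True if a confused robot could also make this move


def select_move(moves, capable, transparent, reveal_bonus=1.0):
    """Choose the robot's move (illustrative sketch).

    A confused robot is restricted to moves a confused robot can make.
    Opaque: maximize expected reward only.
    Transparent: add a hypothetical `reveal_bonus` to moves that only a
    capable robot could make, since those moves reveal the robot's type.
    """
    available = [m for m in moves if capable or m.confused_can_pick]

    def score(m):
        bonus = reveal_bonus if transparent and not m.confused_can_pick else 0.0
        return m.expected_reward + bonus

    return max(available, key=score)


moves = [
    Move("A", 2.0, confused_can_pick=True),
    Move("B", 1.5, confused_can_pick=False),
]
print(select_move(moves, capable=True, transparent=False).name)  # A
print(select_move(moves, capable=True, transparent=True).name)   # B
```

With these made-up numbers, the opaque policy takes the higher-reward shared move, while the bonus tips the transparent policy toward the move that only a capable robot can make.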

Transparency

The idea behind transparency is that the robot selects a move that a confused robot would not be able to select. This immediately reveals the robot's type to the user and ensures that the user is aware of the robot's capabilities. In our scenario, the transparent move offered the greatest potential reward. However, that reward is only achieved if both the user and the robot select the same block.
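Why a transparent move is immediately revealing can be shown with Bayes' rule. The sketch below assumes, purely for illustration, that the user models each robot type as picking uniformly among the moves available to it; the move names and the confused robot's subset are hypothetical. Because a confused robot can never make a capable-only move, observing one drives the user's belief that the robot is capable to 1.

```python
def posterior_capable(prior, move, capable_moves, confused_moves):
    """P(robot is capable | observed move), by Bayes' rule.

    Illustrative assumption: each robot type picks uniformly at random
    among the moves available to it.
    """
    like_capable = 1 / len(capable_moves) if move in capable_moves else 0.0
    like_confused = 1 / len(confused_moves) if move in confused_moves else 0.0
    evidence = prior * like_capable + (1 - prior) * like_confused
    if evidence == 0:
        raise ValueError("move is impossible for both robot types")
    return prior * like_capable / evidence


capable_moves = {"A", "B", "C", "D"}
confused_moves = {"A", "B"}  # hypothetical subset available to a confused robot
print(posterior_capable(0.5, "C", capable_moves, confused_moves))  # 1.0
```

Under the same assumption, observing a shared move such as `"A"` leaves the user uncertain (the posterior is 1/3 with a 0.5 prior), which is the trade-off the opaque policy accepts.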

Opacity

The idea behind opacity is that the robot selects a move that both a capable and a confused robot can select. This is considered a safe move, since the robot is known to be able to select it regardless of its type. In our scenario, the opaque move offered a lower reward, but one still higher than no collaboration.
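The split between opaque and transparent moves reduces to set operations, and the coordination requirement to an equality check. This is a minimal sketch under assumptions: the block names, reward values, and the `no_collab_reward` default of 0.0 are hypothetical placeholders rather than the study's numbers.

```python
def classify_moves(capable_moves, confused_moves):
    """Split a capable robot's moves into opaque (safe) and transparent sets."""
    opaque = capable_moves & confused_moves       # either robot type can make these
    transparent = capable_moves - confused_moves  # only a capable robot can make these
    return opaque, transparent


def joint_reward(robot_move, user_move, rewards, no_collab_reward=0.0):
    """Reward is earned only when robot and user pick the same block.

    `no_collab_reward` is a hypothetical placeholder for the outcome
    when their choices differ.
    """
    if robot_move == user_move:
        return rewards[robot_move]
    return no_collab_reward


opaque, transparent = classify_moves({"A", "B", "C", "D"}, {"A", "B"})
print(sorted(opaque), sorted(transparent))  # ['A', 'B'] ['C', 'D']
```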

Development

The user study involved a variety of technologies to get the different systems working together. The first step was detecting the move chosen by the user. To do this, a computer vision algorithm detected when a block was moved off the QR code placed below it. Once a block is selected, the user's move is run through an algorithm that calculates the robot's move. That move is then sent to the robot through a Flask server, which communicates with the robot's actuators using ROS. The robot has pre-determined motion plans for specific mapped positions, which makes selecting blocks straightforward. The final part is a GUI built with Tkinter that displays the real-time score to the user.
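The detection step can be sketched as a diff of visible QR codes between camera frames: while a block rests on its code, the code is hidden, so a newly visible code marks the selected block. This is an illustrative sketch, not the project's code. It assumes some decoder (for example OpenCV's `cv2.QRCodeDetector`) already returns the IDs visible in each frame, and the class name `SelectionDetector` and the `stable_frames` debounce threshold are hypothetical additions to tolerate frames where detection flickers.

```python
class SelectionDetector:
    """Report which block the user moved, based on QR-code visibility.

    Hypothetical sketch: feed it the set of QR-code IDs decoded from each
    camera frame. The first frame sets the baseline (codes visible before
    the user acts). A code that becomes newly visible and stays visible for
    `stable_frames` consecutive frames is reported as the selected block.
    Create a new detector for each turn.
    """

    def __init__(self, stable_frames=3):
        self.stable_frames = stable_frames
        self.baseline = None  # codes visible before the user moved a block
        self.counts = {}      # consecutive frames each new code has been seen

    def update(self, visible_codes):
        visible = set(visible_codes)
        if self.baseline is None:
            self.baseline = visible
            return None
        newly_visible = visible - self.baseline
        # Codes that disappear again (e.g. detection flicker) lose their count.
        self.counts = {c: self.counts.get(c, 0) + 1 for c in newly_visible}
        stable = [c for c, n in self.counts.items() if n >= self.stable_frames]
        # Only report an unambiguous selection.
        return stable[0] if len(stable) == 1 else None


detector = SelectionDetector(stable_frames=2)
for frame in [[], ["q2"], ["q2"]]:
    result = detector.update(frame)
print(result)  # q2
```

Requiring a code to remain visible for a few frames trades a small delay for robustness against a single misdetected frame; the returned ID would then be passed to the move-selection step.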